Cyclops - A Spatial AI-Based Assistant for Visually Impaired People

Collaborators: Malav Bateriwala, Vishruth Kumar

Our project was shortlisted for the OpenCV Spatial AI Competition, sponsored by Intel and OpenCV.org. We were among the top 32 teams selected from 235 teams around the world.

We received an OAK-D (OpenCV AI Kit with Depth-Sensing Capability) to develop a working prototype of Cyclops.

Project in brief

Cyclops is a spatial AI-based assistant for visually impaired people. It is built into a device similar to a VR headset, lets the wearer ask whether an object named in a spoken request is nearby, and provides active feedback as the wearer approaches a detected object.

Problem description

We propose Cyclops as a spatial AI-based assistant for visually impaired people. It is a system assembled in a device similar to a VR headset. The user asks Cyclops to search for a particular object, for example, "Cyclops, can you see bottle?" Cyclops searches for the object and guides the user with active audio feedback. The overall problem consists of two main stages:

- Determining the object to search for from the user's input

This can be done with NLP-based speech recognition. The user provides the input as speech, which is converted to text by a speech recognition method. The resulting text is then used to determine the object to search for.

- Guiding the user to the target object

Object detection can be performed using the neural inference capability of the OAK-D. If the object is detected, audio feedback will be generated with additional information about its location relative to the user, for example, "Object at 3 meters on the right" or "Object is far away on the left." The approximate distance to the target object will be calculated using the depth functionality of the OAK-D. The audio feedback will be updated continuously from the latest OAK-D measurements until the user reaches the object.

Finally, the user will be informed when the object is close enough to be held.
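The guidance described above is a loop: announce the latest distance, repeat, and stop once the object is within reach. The following is a minimal sketch of that loop, not the project's code. The 0.5 m `GRASP_DISTANCE_M` threshold, the `guide` function, and the message wording are hypothetical; `readings` stands in for the stream of distance updates from the OAK-D, with `None` meaning the object is not currently visible.

```python
from typing import Callable, Iterable, Optional

# Hypothetical "close enough to be held" threshold, in metres.
GRASP_DISTANCE_M = 0.5


def guide(readings: Iterable[Optional[float]], speak: Callable[[str], None]) -> bool:
    """Announce distance updates until the target is within reach.

    readings yields the latest distance to the target in metres, or None when
    the target is not detected in the current frame. Returns True once the
    user reaches the object, or False if the readings run out first.
    """
    for distance in readings:
        if distance is None:
            speak("Object not found")
        elif distance <= GRASP_DISTANCE_M:
            speak("Object is within reach")
            return True
        else:
            speak(f"Object at {distance:.1f} meters")
    return False
```

For example, `guide([3.0, 1.2, 0.4], print)` prints two distance updates and then "Object is within reach". Passing `speak` in as a callable keeps the loop independent of the text-to-speech backend.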

Illustration of a scenario where Cyclops guides the user to pick up a water bottle.

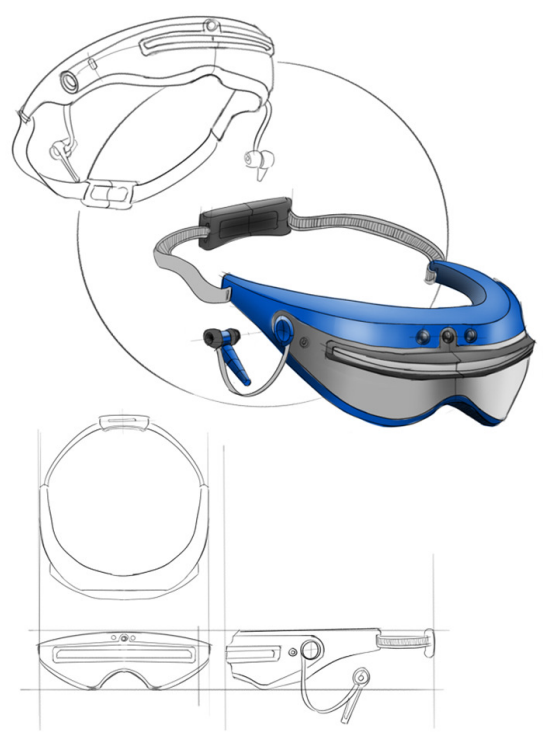

Product sketch for Cyclops.

Implementation Details

Cyclops is built with an OAK-D and a Raspberry Pi 3B, and the code is written in Python. Processing is split across two threads. One thread takes audio input from the user and generates audio feedback, and the other acquires data from the OAK-D device.
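The two-thread design can be sketched with Python's standard `threading` module. This is an illustration under assumptions, not the project's implementation: `read_detection` and `speak` are hypothetical stand-ins for the OAK-D acquisition code and the audio output, speech input is omitted for brevity, and the lock is an addition that keeps the label and distance consistent when both threads touch the shared globals.

```python
import threading
import time

# Shared state written by the vision thread and read by the audio thread.
# The lock (an assumption, not from the source) ensures the label and
# distance are always read as a consistent pair.
state_lock = threading.Lock()
latest = {"target": None, "label": None, "distance_m": None}
stop_event = threading.Event()


def vision_loop(read_detection, period_s=0.01):
    """Publish the newest detection until stop_event is set.

    read_detection is a hypothetical stand-in for the OAK-D acquisition code;
    it returns a (label, distance_m) tuple, or None when nothing is detected.
    """
    while not stop_event.is_set():
        result = read_detection()
        if result is not None:
            with state_lock:
                latest["label"], latest["distance_m"] = result
        time.sleep(period_s)


def audio_loop(speak, period_s=0.01):
    """Announce the target's distance whenever the vision thread has seen it.

    speak is a hypothetical callable such as a text-to-speech wrapper. In
    Cyclops this thread also handles the spoken query that sets the target.
    """
    while not stop_event.is_set():
        with state_lock:
            target = latest["target"]
            label, distance = latest["label"], latest["distance_m"]
        if target is not None and label == target:
            speak(f"{label} at {distance:.0f} meters")
        time.sleep(period_s)
```

To run it, set `latest["target"]`, start one `threading.Thread` per loop, and call `stop_event.set()` followed by `join()` on both threads to shut down. A real speech call blocks for seconds, so the audio loop's polling period would in practice be dominated by playback time.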

Speech To Text Conversion

The SpeechRecognition library is used to capture the user's input as an audio signal and convert it to text. We explored several other speech-to-text libraries but chose this one because it supports several speech recognition engines and APIs, both online and offline. For our experiments, we use its Google Cloud Speech API method to convert the audio signal to text.
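A minimal sketch of one listen-and-transcribe cycle with the SpeechRecognition package (imported as `speech_recognition`) might look like the following. It assumes PyAudio is installed for microphone access and that Google Cloud credentials are available through application default credentials (for example, the `GOOGLE_APPLICATION_CREDENTIALS` environment variable). The function name `listen_for_command` is hypothetical, and the package is imported inside the function so the module loads even where it is not installed.

```python
def listen_for_command() -> str:
    """Record one utterance from the default microphone and transcribe it.

    Assumes the SpeechRecognition package, PyAudio, and Google Cloud
    application default credentials. Returns "" if speech is unintelligible.
    """
    import speech_recognition as sr  # lazy import: only needed when called

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:
        # Calibrate the energy threshold to the background noise first.
        recognizer.adjust_for_ambient_noise(source, duration=1)
        audio = recognizer.listen(source)
    try:
        return recognizer.recognize_google_cloud(audio).strip().lower()
    except sr.UnknownValueError:
        return ""  # the recognizer could not make sense of the audio
```

Lower-casing the transcript simplifies the keyword matching that follows. Network or credential failures raise `sr.RequestError`, which this sketch deliberately leaves to the caller.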

The generated text is then used to determine the object to search for. We use the identification phrase "Cyclops can you see". The word following this phrase is stored as the target object. Hence, to search for a bottle, the user says "Cyclops can you see bottle".
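The keyword rule above (take the word after "Cyclops can you see") can be sketched with a regular expression. The pattern and the `extract_target` name are illustrative assumptions. Matching is case-insensitive and tolerates punctuation between words, so a transcript such as "Cyclops, can you see bottle?" also works.

```python
import re

# Identification phrase followed by one captured word (the target object).
TRIGGER = re.compile(r"cyclops\W+can\s+you\s+see\s+(\w+)", re.IGNORECASE)


def extract_target(transcript: str):
    """Return the word following the identification phrase, or None."""
    match = TRIGGER.search(transcript)
    return match.group(1).lower() if match else None
```

Because only the next word is captured, a multi-word object such as "cell phone" would be reduced to "cell". This is a limitation of the single-word rule rather than of the regex.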

Object Detection

Object detection is performed by the OAK-D device, which is connected to the host processor, a Raspberry Pi 3B. The depthai API is used to obtain both the image data captured by the OAK-D camera and the model's predictions. We use the MobileNetSSD model for object detection. The OAK-D also provides depth information, which can be combined with the detections to determine the distance of each detected object from the camera.
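The source does not say exactly how detections and depth are combined. Once a detection has 3D camera-frame coordinates, though, distance and direction are plain geometry. This sketch assumes coordinates in millimetres, the unit DepthAI's spatial detection results use, with positive `x` to the right of the camera. Verify that sign convention on the device. `SpatialPoint`, `distance_m`, and `bearing_deg` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class SpatialPoint:
    """Camera-frame coordinates of a detection, in millimetres (assumed).

    x: horizontal offset (positive = right, assumed), y: vertical offset,
    z: forward distance from the camera.
    """
    x: float
    y: float
    z: float


def distance_m(p: SpatialPoint) -> float:
    """Straight-line distance from the camera to the object, in metres."""
    return math.sqrt(p.x ** 2 + p.y ** 2 + p.z ** 2) / 1000.0


def bearing_deg(p: SpatialPoint) -> float:
    """Horizontal angle to the object: negative = left, positive = right."""
    return math.degrees(math.atan2(p.x, p.z))
```

For example, a detection at `SpatialPoint(300, 0, 400)` is 0.5 m away and slightly to the right.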

Depth map returned by the OAK-D.

Audio Feedback

Once the target object has been determined from the user's request, audio feedback must be generated to guide the user whenever Cyclops detects that object.

The object detection output and the corresponding distance information are stored in global variables that both the vision thread and the audio thread can access. The audio output is generated from this information to guide the user. For example, if the object is detected 2 meters in front of the user, the audio feedback says "Object at 2 meters in front". This way, the user can orient themselves and move toward the target object.
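Turning a detection into a phrase like "Object at 2 meters in front" could look like the sketch below. The `feedback_phrase` name, the 0.3 m `center_band_m` tolerance for "in front", and the positive-is-right convention for `x_offset_m` are hypothetical choices, not taken from the project.

```python
def feedback_phrase(distance_m: float, x_offset_m: float, center_band_m: float = 0.3) -> str:
    """Build a spoken hint such as "Object at 2 meters in front".

    x_offset_m is the object's horizontal offset from the camera axis
    (positive = right, an assumed convention). Offsets within center_band_m
    (a hypothetical tolerance) count as "in front".
    """
    if abs(x_offset_m) <= center_band_m:
        direction = "in front"
    elif x_offset_m > 0:
        direction = "on the right"
    else:
        direction = "on the left"
    meters = round(distance_m)  # whole meters are easier to follow by ear
    unit = "meter" if meters == 1 else "meters"
    return f"Object at {meters} {unit} {direction}"
```

Python's `round` uses round-half-to-even, so 2.5 m is announced as 2 meters. For spoken guidance that small bias is unlikely to matter.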

The Google Text-to-Speech (gTTS) library is used to generate audio files from strings, and the mpg123 API is used to play the generated audio files.
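A minimal sketch of the synthesize-then-play step follows. It assumes playback through the `mpg123` command-line player invoked with `subprocess`. The source's "mpg123 API" could instead mean a binding to libmpg123, so treat this as one possible wiring. gTTS needs network access because it calls Google's TTS service. The name `speak_text` is hypothetical, and gTTS is imported inside the function so the module loads without it.

```python
import os
import subprocess
import tempfile


def speak_text(text: str, lang: str = "en") -> None:
    """Synthesize text with gTTS and play it with the mpg123 player.

    Assumes the gtts package, network access, and mpg123 on the PATH.
    """
    from gtts import gTTS  # lazy import: only needed when called

    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "feedback.mp3")
        gTTS(text=text, lang=lang).save(path)
        # -q suppresses mpg123's banner and progress output.
        subprocess.run(["mpg123", "-q", path], check=True)
```

Because the same few phrases recur during guidance, caching the generated MP3 files by text would avoid repeated network round trips on the Raspberry Pi.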

Project demo video.

Project page template inspired by GradSLAM.